Artificial Intelligence (AI) is no longer a futuristic idea — it powers search engines, customer service chatbots, medical diagnostics, hiring tools, and even self-driving cars. But as AI becomes more integrated into everyday life, new challenges emerge. Questions about bias, ethics, and trust are at the center of debates among policymakers, businesses, and the public.

The truth is, AI is only as fair and trustworthy as the data, systems, and humans behind it. And in 2025, with AI touching billions of lives, making it ethical, transparent, and as free of bias as possible is no longer optional. It is essential.

This article explores the three biggest challenges in AI today — bias, ethics, and trust — and what businesses, regulators, and researchers are doing to solve them.

What Is AI Bias?

AI bias occurs when an algorithm produces unfair or prejudiced outcomes, often because it was trained on biased data.

Real-World Examples of AI Bias

- Hiring tools filtering candidates unfairly: Some AI recruitment systems have been shown to downgrade women or minority applicants due to biased training data.
- Facial recognition errors: Studies revealed that certain AI systems misidentify people of color at much higher rates than white individuals.
- Loan approval discrimination: AI-driven credit scoring can reinforce historical inequalities, denying loans to certain demographics.

Why Does AI Bias Happen?

- Data problems – If the training data reflects social inequalities, the AI will replicate them.
- Lack of diversity – Limited representation in datasets skews outputs.
- Design flaws – Developers may not account for fairness when building and evaluating models.
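One way to catch the "lack of diversity" problem early is to compare each group's share of the training data against a reference share. The sketch below is a minimal illustration, not a standard tool: the function name `representation_gaps`, the `group` field, the reference shares, and the 5-point tolerance are all assumptions made for the example.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares, tolerance=0.05):
    """Return groups whose dataset share differs from the reference share
    by more than `tolerance` (all names and thresholds are illustrative)."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            # Positive means over-represented, negative means under-represented.
            gaps[group] = round(observed - expected, 3)
    return gaps


# Toy dataset: group A makes up 80% of the records, group B only 20%.
records = [{"group": "A"}] * 80 + [{"group": "B"}] * 20
reference = {"A": 0.5, "B": 0.5}  # assumed population shares

gaps = representation_gaps(records, "group", reference)
print(gaps)
```

A check like this only flags imbalance in the raw counts. It says nothing about label quality or historical bias inside the data, so it complements rather than replaces a fuller review.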

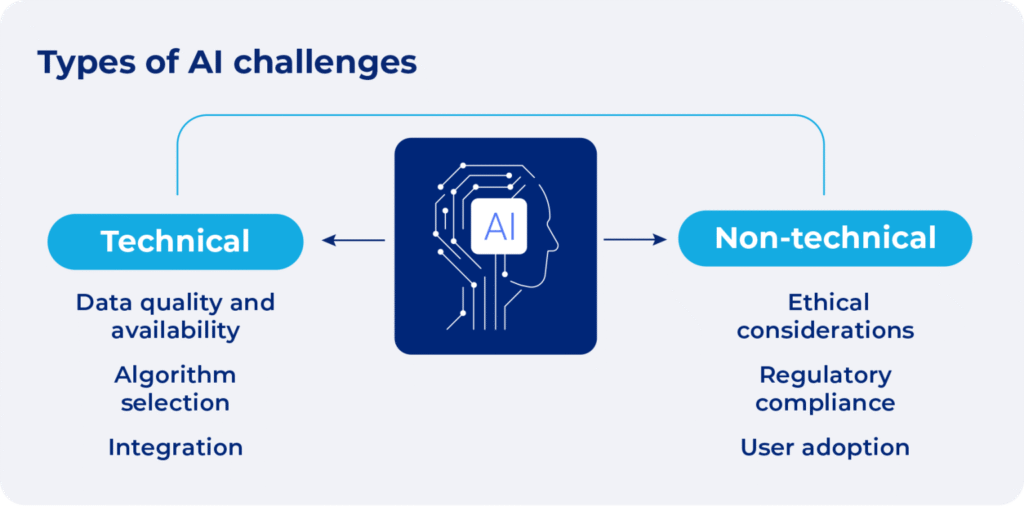

The Ethics of AI Deployment

Ethical concerns in AI go far beyond technical glitches. They revolve around how AI is used in society.

Ethical Dilemmas in AI

Surveillance and privacy – AI-powered cameras and tracking systems raise concerns about mass surveillance.

Deepfakes – Generative AI creates realistic fake videos, fueling misinformation and fraud.

Predictive policing – Using AI to predict crimes has been criticized for reinforcing racial profiling.

Balancing Innovation with Responsibility

While AI has the power to transform industries, unchecked innovation risks causing harm. That’s why many organizations are building Responsible AI frameworks, ensuring fairness, accountability, and transparency from the ground up.

Trust: The Human Factor in AI Adoption

No matter how advanced an AI system is, if people don’t trust it, they won’t use it.

Why Trust Matters

Explainability: Users want to know how decisions are made. For example, if an AI rejects a loan application, the applicant deserves a clear explanation.

Transparency: Black-box AI models undermine trust. Businesses are now focusing on explainable AI (XAI) to make models more understandable.

Reliability: Consistent and accurate performance builds confidence over time.
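To make the loan example concrete, here is a minimal sketch of how a simple, inherently interpretable model can return reasons alongside its decision. The weights, feature names, and the `score_with_reasons` helper are hypothetical and chosen only for illustration. Real credit models and explanation methods are considerably more involved.

```python
import math

# Hypothetical weights for an illustrative linear (logistic) credit model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0


def score_with_reasons(applicant, top_n=2):
    """Return the approval probability and the features that lowered it most."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Sort negative contributions so the most harmful feature comes first.
    negatives = sorted(
        (value, name) for name, value in contributions.items() if value < 0
    )
    reasons = [name for _, name in negatives[:top_n]]
    return probability, reasons


applicant = {"income_k": 40, "debt_ratio": 0.6, "late_payments": 3}
probability, reasons = score_with_reasons(applicant)
print(f"approval probability: {probability:.3f}")
print("main factors against approval:", reasons)
```

Because each feature's contribution is just its weight times its value, the explanation is exact for this model. Black-box models need separate explanation techniques, and those explanations are approximations.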

Regulations and Global Standards

Governments worldwide are stepping in to regulate AI, aiming to protect consumers and set ethical boundaries.

Key Regulatory Moves in 2025

EU AI Act – The world’s first comprehensive AI law, classifying AI systems by risk level and imposing strict requirements for high-risk use cases.

U.S. AI Governance Framework – Executive orders and the NIST AI Risk Management Framework are shaping AI adoption in America.

Global efforts – Countries like Canada, the UK, and Singapore are drafting their own AI ethics policies.

These laws and frameworks, some binding and some voluntary, reflect a growing consensus: AI must be safe, transparent, and accountable.

How to Build Ethical and Trustworthy AI

Businesses and researchers can take concrete steps to reduce bias and build trust.

Best Practices for Ethical AI

Use diverse, representative datasets – Avoid over-reliance on narrow or biased data sources.

Conduct bias audits regularly – Continuously test systems for unintended discrimination.

Maintain human oversight – Especially in high-risk decisions like healthcare or criminal justice.

Promote transparency – Adopt explainable AI techniques to clarify decision-making.

Create Responsible AI teams – Many tech companies now have dedicated teams for AI governance and ethics.
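A bias audit can start with something as simple as comparing approval rates across groups. The sketch below computes per-group selection rates and their ratio, then flags the result if the ratio falls below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in U.S. employment contexts. The data, group labels, and function names are made up for the example, and a single metric like this is a starting point rather than a complete audit.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no disparity to measure
    return min(rates.values()) / highest


# Toy decisions: group A is approved 60% of the time, group B 30%.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed audit threshold (four-fifths rule of thumb)
print(rates, ratio, flagged)
```

Running a check like this on every model release, and on live decisions over time, turns "audit regularly" into a repeatable step rather than a one-off review.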

The Road Ahead

AI’s potential is enormous, but so are its risks. The companies that lead in the AI race won’t just be those with the most advanced models — they’ll be the ones that prioritize fairness, accountability, and trust.

As consumers, businesses, and regulators demand higher standards, building responsible and ethical AI will become a competitive advantage, not just a compliance requirement.

FAQs on AI Bias, Ethics, and Trust

1. What is AI bias?

AI bias occurs when algorithms produce unfair results due to biased training data, design flaws, or lack of diversity in datasets.

2. Why is ethics important in AI?

Ethics ensures AI systems are used responsibly, minimizing harm, protecting privacy, and promoting fairness.

3. How can AI become more trustworthy?

AI becomes more trustworthy when it is transparent, explainable, and consistent, and when humans keep oversight of sensitive decisions.

4. What role do regulations play in AI ethics?

Laws like the EU AI Act establish rules to ensure AI is safe, fair, and accountable, especially in high-risk industries.

5. What is Responsible AI?

Responsible AI refers to frameworks and practices that ensure AI development and deployment prioritize fairness, accountability, and transparency.


The future of AI depends on how we tackle bias, ethics, and trust today. If you’re building or using AI, make responsibility part of your strategy — because the most successful AI is not only intelligent but also ethical.
